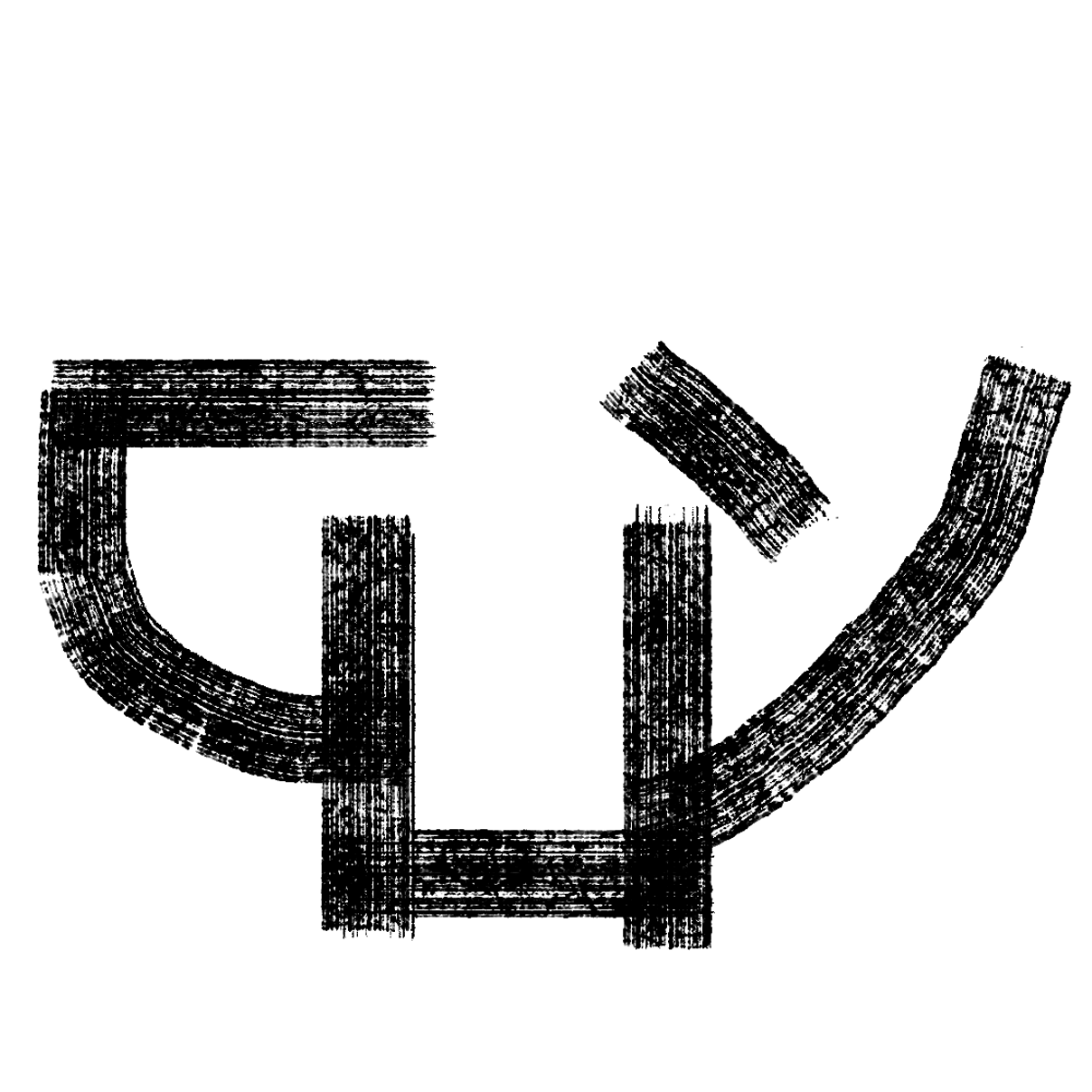

A sound installation presenting a study of graphic scores and interactive, gestural composition practice. Developed as part of the research for my Sonic Arts Master’s, it sits somewhere between real-time composition and gestural experimental music notation.

The result is an experience of composing music by manipulating environmental samples. Working with elements familiar to most participants was an attempt to make composing music more inclusive and more accessible to people not necessarily versed in sound art. Through its experimental graphic score notation, the installation stretches the borderline between visual arts, sound art, and performance art, raising basic questions such as “what does music look like?” and treating composition and performance as two sides of the same coin.

The installation explores our modes of perception and the corresponding listening modes in gestural composition, as well as our “mental imaging” of visual cues into experimental notation systems.

This work turns to the immediate environmental sounds found around southeast London. This search for meaning in the sounds of the environment leads back to soundscape composition and Barry Truax’s communications model, borrowed from cognitive science. I question the form of visual scoring as a static creation, concentrating on the generalization of sound objects and allowing for a mixture of visual feedback (for interactive systems) and an actual score, which is arguably “playable”.

One of the main commonalities between these notation systems lies in their “qualitative imaginary comparatives”, which move freely between physical shapes on paper and sound propagation. Drawing on the mental similarities that players project onto it, the created graphic score retains some visual concepts of musical content, embedded using simple geometrical shapes, mainly circles, ellipses, and straight lines. This intertwines the three levels of conceptualization: symbolism, signage, and associative impulse.

All sounds are triggered in real time by user interaction, which also controls and manipulates them. Through multiple juxtapositions of generative and interaction-based processes, the synthesized environment takes on a naturally occurring sound, extending the notion of soundscape composition.

Project supervised by Daniel James Ross